2023 could fairly be called the Year of Artificial Intelligence (AI). With the release and rapid uptake of ChatGPT in November 2022 and the continued development of AI applications across industries ranging from finance to art, comprehensive guidelines are essential to protecting consumer privacy. AI development, adoption, and use show no signs of slowing.

According to McKinsey’s survey “The state of AI in 2022–and a half decade in review,” the number of organizations with embedded AI capabilities more than doubled between 2017 and 2022. However, the same survey reveals that “the proportion of organizations using AI has plateaued between 50 and 60 percent for the past few years.” Another McKinsey survey, on the state of AI in 2023, suggests this plateau may be temporary: of the organizations reporting AI adoption, 60% are using generative AI, 40% say that because of generative AI “their companies expect to invest more in AI overall,” and 28% confirm that “generative AI use is already on their board’s agenda.” These figures suggest a departure from the 50–60% plateau and a new growth phase for the AI industry. To protect consumer privacy while allowing widespread innovation and adoption of new AI technologies, this growth phase must be accompanied by horizontal (cross-industry) regulation.

To date, few governments have introduced AI laws to protect consumers from the growing use of AI across industries. The European Union (EU) is a global leader in privacy legislation and is one of the first to introduce horizontal AI legislation: the AI Act. The Members of the European Parliament (MEPs) and the Council reached a political deal on the AI Act on December 8–9, 2023. The bill still requires formal approval by the European Parliament and the Council; once both institutions approve it, the bill becomes EU law. The AI Act is horizontal legislation that could lead the world toward a future where, ideally, industry-agnostic AI innovation is minimally restricted and consumer privacy is considered and protected.


Legislation Timeline

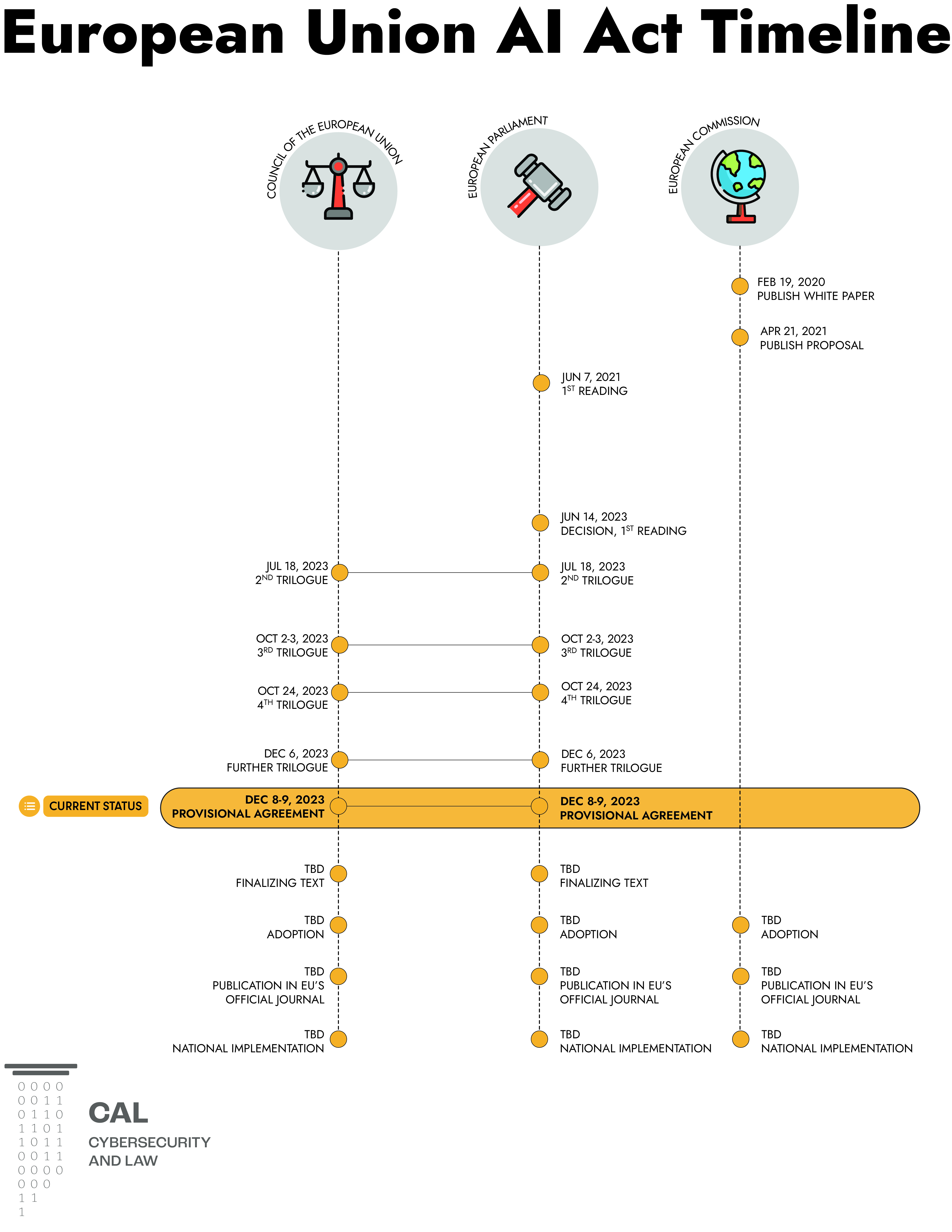

The infographic above provides an overview of the history of the AI Act up to the provisional agreement on December 8–9, 2023, and the next steps for adoption in the EU.

Background

In 2017, the European Council called on the Commission to address emerging AI trends. Since then, the Commission has published a White Paper on AI (in February 2020) describing Europe’s stance on AI innovation and regulation. On December 8–9, 2023, the EU reached a provisional agreement on the Artificial Intelligence Act (AI Act).

This agreement moves the world’s first horizontal AI legislation closer to adoption. If adopted, the legislation could pave the way for AI regulation across the world. The goal of the AI Act is to protect “fundamental rights, democracy, and the rule of law and environmental sustainability” from high-risk AI. To accomplish this, the AI Act sets rules for developing and deploying AI that grow stricter with the associated risks and consequences. Without implementation and enforcement, however, these rules would be ineffective. The provisional agreement therefore provides for a new European AI Office within the Commission to help implement and enforce AI legislation, including the AI Act.

Notably, the legislation explicitly aims to protect environmental sustainability, which is likely to become an increasingly important concern as AI applications grow more resource-intensive to develop and run. Regulating the resource consumption of these programs could reduce the effects of intensive computing on the planet and improve the availability and reliability of AI solutions in the future.

Although the European Parliament and the Council reached a provisional agreement on the AI Act on December 8–9, 2023, the final text is not yet publicly available; both institutions still need to vote on it before the act is adopted. However, in the press conference following the deal, co-rapporteur Dragoș Tudorache offered some insight into what we can expect, saying the AI Act provides a “balance between protection and innovation.”

Tudorache also acknowledged that legislation can stifle AI innovation, which could limit advancements in AI technology. However, allowing AI developers to develop, train, and release AI programs without any guidelines could have severe consequences. Thus, the AI Act’s stated goal is to strike a balance between restricting innovation and protecting consumers. That balance is necessary and, if achieved, would be an effective way to protect consumers while allowing AI to progress.

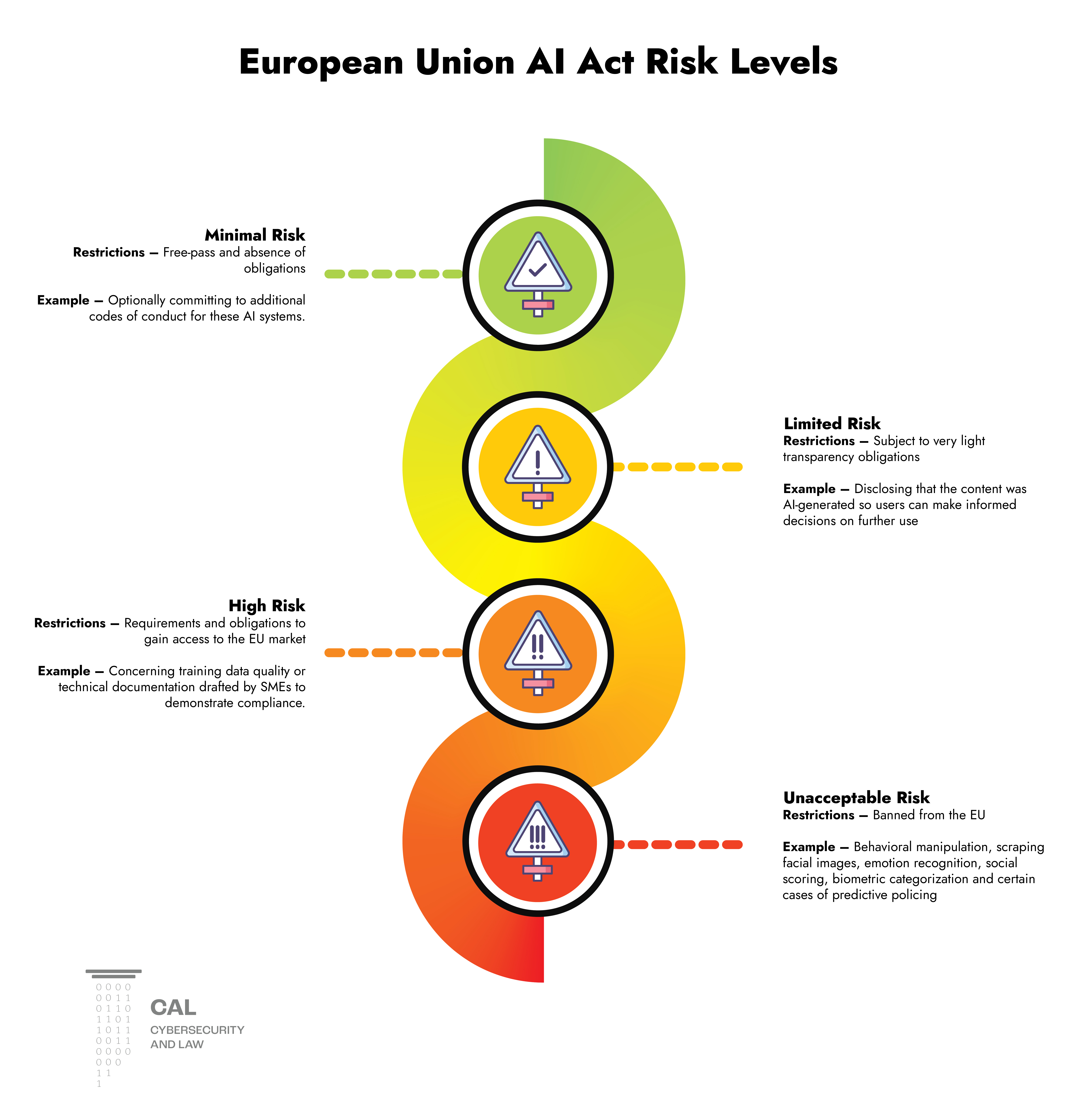

AI Act Risk Levels

To balance innovation and protection, the AI Act regulates AI based on risk levels: the higher the risk, the stricter the rules. The risk levels, from most to least risk, are unacceptable risk, high risk, limited risk, and minimal risk.

Unacceptable Risk

Restrictions: Banned from the EU

Examples: behavioral manipulation, untargeted scraping of facial images, emotion recognition in the workplace and educational institutions, social scoring, biometric categorization, and some instances of predictive policing

These AI systems are considered a threat to people and pose the most risk to consumers of the four risk categories. The AI Act applies its strictest rule to them: an outright ban. Many AI systems developed or used today may fall under this category and, in their current form, would be banned in the EU.

Behavioral Manipulation

Facebook Likes can be used to accurately predict sensitive personal attributes, from sexual orientation to intelligence, happiness, use of addictive substances, and parental separation, according to an article in the Proceedings of the National Academy of Sciences. Such detailed insight from such easily accessible sources could have severe consequences when an AI system can access the inferred information. For example, knowing someone’s political leanings could make a misinformation campaign more precisely targeted; similarly, bad actors could use AI systems with access to inferred information to harass people based on their predicted personal attributes. Under the AI Act, AI systems that use this kind of information to manipulate human behavior and circumvent people’s free will would be banned in the EU.

Sensitive personal attributes identified with AI could be applied to marketing or to curating content such as news articles or social media posts. AI can also detect an individual’s biases and use them to build a personalized strategy for encouraging the consumption of online goods, as demonstrated in this article. To do so, the system exploits the individual’s mental state, tailoring its strategy to the biases it detects. By understanding users’ mental states, organizations can alter or curate content to manipulate user behavior. For example, they could present upsetting or sensational content aligned with a particular political philosophy, or make a program more addictive so that users continue a behavior they would otherwise like to stop.

Scraping Facial Images

Clearview AI is a company that built a facial recognition database by scraping, aggregating, and processing more than 30 billion publicly available photos. From these photos, it generates unique identifiers associated with individuals’ accounts, among other identifying information. While law enforcement can use this technology to help find victims of abuse and trafficking or identify perpetrators of violent crimes, facial recognition technology has also led to wrongful arrests, including a case in Detroit and a case in New Orleans.

Emotion Recognition

Organizations can use AI to identify human emotions and then stimulate them by serving specific content at opportune moments. DeepFace is an AI framework that can predict an individual’s age, gender, facial expression (including quantitative indicators of anger, fear, neutrality, sadness, disgust, happiness, and surprise), and race/ethnicity from one or more images. Quantitatively recognizing emotions could lead to positive outcomes, such as helping neurodivergent individuals who have difficulty recognizing facial expressions. However, it could also enable manipulating consumers through precisely timed content.

Social Scoring

Providers could develop AI systems that rank members of society based on physical and psychological attributes, along with a method to track and dynamically update each person’s social score. Social scoring is a common trope in dystopian science fiction, where it typically ends in vast inequalities and power imbalances. The press release for the AI Act mentions social scoring only briefly; however, Amendment 40 to the previous draft of the AI Act defines social scoring as AI systems that “evaluate or classify natural persons or groups based on multiple data points and time occurrences related to their social behavior in multiple contexts or known, inferred or predicted personal or personality characteristics.” Social scoring may be further from everyday reality than other AI use cases, but the foresight to include this amendment could prevent or reduce its negative impacts on consumers.

Biometric Categorization

According to the Council’s press release, the banned uses include biometric categorization to “infer sensitive data, such as sexual orientation or religious beliefs, and some cases of predictive policing for individuals.” As mentioned in the section on behavioral manipulation above, Facebook Likes can also be used to infer sensitive data. Although Likes are not biometric data, they show how inferred sensitive attributes could be used to categorize individuals. Developing categories based on sensitive attributes may lead to discrimination and bias that disproportionately affect certain groups.

Predictive Policing

Entities using predictive policing technology often keep its use secret or obscure it from the public. In theory, predictive policing could improve the chances of preventing crimes. In practice, however, it has led to biased policing and undesired outcomes for certain groups of people. The risk of corrupting the justice system with biased software outweighs the benefit of preventing certain crimes. Moreover, the balance tips further against predictive policing given that its predictions are often general rather than specific, such as geographic boundaries drawn on a map.

High Risk

Restrictions: requirements and obligations to gain access to the EU market

Examples: risk-mitigation systems, high-quality data sets, activity logging, detailed documentation, clear user information, human oversight, and a high level of robustness, accuracy, and cybersecurity

The EU defines high-risk AI systems as those that pose “significant potential harm to health, safety, fundamental rights, environment, democracy and the rule of law.” High-risk AI systems identified by the EU include systems that can influence the outcome of elections and voter behavior, as well as critical infrastructure applications.

Under the AI Act, organizations deploying high-risk AI, including small and medium-sized enterprises (SMEs), must conduct adequate risk assessments and put mitigation systems in place. To help organizations carry out these assessments, the Parliament press release notes that “the agreement promotes so-called regulatory sandboxes and real-world-testing, established by national authorities to develop and train innovative AI before placement on the market.”

One example of high-risk AI is insurance underwriting, as in the case of Hiscox and Google. Generally, AI applied to insurance underwriting may produce more efficient, accurate, and fair outcomes than traditional methods. However, denying a consumer insurance because of algorithmic bias is unacceptable, and AI-driven underwriting can significantly affect consumers.

Using AI in high-risk scenarios could introduce unintended biases through the training data, the development method, or the way the system is deployed, leading to unacceptable consequences for consumers. However, organizations can mitigate these risks by, for example, keeping a log of training data to assure its quality, or by demonstrating compliance through other means set out in the AI Act once it is published. Complying with these regulations could help ensure that society reaps the benefits of these AI applications while reducing the associated risks.

Limited Risk/Transparency Risk

Restrictions: subject to very light transparency obligations

Example: Disclosing that the content was AI-generated so users can make informed decisions on further use

Transparency-risk AI systems (which the Council refers to as AI systems presenting only limited risk) would have to meet transparency obligations. For example, organizations using AI chatbots must tell consumers that they are interacting with an AI chatbot. Additionally, the AI Act requires AI-generated content, including deep fakes, to be labeled or disclosed, and synthetically generated audio, video, text, and image content to be marked in a machine-readable format.

Requiring disclosure of AI-generated content is a step in the right direction. However, it will likely be difficult for the AI Office to enforce as generative AI tools become more advanced and widespread.

Minimal Risk

Restrictions: free-pass and absence of obligations

Example: Optionally committing to additional codes of conduct for these AI systems.

According to the Commission’s press release, most AI systems are minimal risk. Minimal-risk applications include AI-enabled recommender systems, such as those used by streaming services, and email spam filters. These applications will be permitted to operate without restrictions because they present minimal or no risk to citizens’ rights or safety.

Restriction Exceptions

While these regulations are intended to be comprehensive, there are certain exceptions for law enforcement related to remote biometric identification (RBI) systems in publicly accessible spaces. “Real-time” RBI would be subject to strict conditions, limited in time and location, and allowed only for:

- Targeted searches of victims in cases including abduction, trafficking, or sexual exploitation

- Prevention of a specific and present terrorist threat

- Locating or identifying a person suspected of having committed one of the specific crimes mentioned in the regulation

“Post” RBI, where identification occurs after a significant delay, would be allowed only for the “targeted search of a person convicted or suspected of having committed a serious crime,” and only after court approval.

Non-Compliance Penalties

Fines for violations of the AI Act are set as either a percentage of the offending company’s global annual turnover in the previous financial year or a predetermined amount, whichever is higher:

| Offense | Share of global annual turnover | Fixed amount |
|---|---|---|
| Violating banned AI applications | 7% | €35 million |
| Violating the AI Act’s obligations | 3% | €15 million |
| Supplying incorrect information | 1.5% | €7.5 million |

Additionally, there are more proportionate caps on these fines for small and medium-sized enterprises (SMEs) and start-ups, according to the Council’s press release.
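To make the “whichever is higher” rule concrete, here is a minimal Python sketch that computes the applicable ceiling for each offense in the table. It assumes the provisional figures reported above, and the offense keys (`banned_application`, `obligation_violation`, `incorrect_information`) are hypothetical labels chosen for illustration. It treats the result as an upper limit rather than the fine a regulator would actually impose, and it does not model the separate caps for SMEs and start-ups.

```python
from decimal import Decimal

# Provisional caps from the table: (share of global annual turnover, fixed amount in EUR).
# The offense keys are hypothetical labels chosen for this sketch.
FINE_CAPS = {
    "banned_application": (Decimal("0.07"), Decimal("35000000")),
    "obligation_violation": (Decimal("0.03"), Decimal("15000000")),
    "incorrect_information": (Decimal("0.015"), Decimal("7500000")),
}


def max_fine(offense: str, global_turnover_eur: Decimal) -> Decimal:
    """Return the higher of the turnover-based cap and the fixed cap for an offense.

    Sketch only: ignores the more proportionate caps for SMEs and start-ups.
    """
    share, fixed_amount = FINE_CAPS[offense]
    # Decimal avoids floating-point rounding when applying percentages like 1.5%.
    return max(share * global_turnover_eur, fixed_amount)


if __name__ == "__main__":
    turnover = Decimal("1000000000")  # EUR 1 billion in the previous financial year
    for offense in FINE_CAPS:
        print(f"{offense}: EUR {max_fine(offense, turnover):,.0f}")
```

For example, a company with €1 billion in global annual turnover that uses a banned application faces a ceiling of €70 million (7% of turnover), because that exceeds the €35 million fixed amount; at €100 million in turnover, 7% is only €7 million, so the fixed €35 million applies instead.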

What’s Next

After the Parliament and Council finalize the legislation, Parliament’s Internal Market and Civil Liberties committees will vote on the agreement in the coming months. Adopting the AI Act would mark a significant milestone for the EU in pioneering comprehensive, horizontal AI regulation. Once the EU adopts this legislation, a Brussels Effect may follow, with countries worldwide implementing similar measures. Ideally, this landmark legislation will fulfill its goal of maintaining and bolstering AI innovation while upholding consumer privacy and ethical standards, leading to a future where AI development and consumer privacy coexist.

Note: While my formal education is in computer science and cybersecurity, I’m constantly learning more about cybersecurity and law, and I’m pursuing a career in data privacy and data law. This blog is a space for me to learn more about the intersections of cybersecurity and law and, hopefully, to provide value to the community in the form of my insights, interpretations, and infographics.

While I aim to consider all angles of a topic, I sometimes miss something. I welcome any and all corrections, insights, and perspectives. If you’d like to provide feedback, point out an error or improvement, or request a topic, please feel free to send an email to feedback@cyberandlaw.com.

Thank you for spending your time with me on this path of discovery, and I hope we can all deepen our understanding of cybersecurity and legal matters together.
